Beyond the Prompt: Navigating the "Cognitive Debt" of 2026

- Guy Galon

- Jan 24

- 4 min read

In the Customer Success world of 2026, we were promised a renaissance.

The narrative was simple: AI would handle the routine work (QBR decks, basic enablement content, and most support tickets), freeing us to become strategic architects of customer value.

But as we lean further into the "Age of Automation," a hidden cost is appearing on the balance sheet of our careers. We are moving faster, but are we thinking more shallowly?

At TheCSCycle, we believe the greatest risk to the modern professional isn’t AI replacing humans; it’s Cognitive Debt eroding our ability to lead with independent thinking.

What is Cognitive Debt?

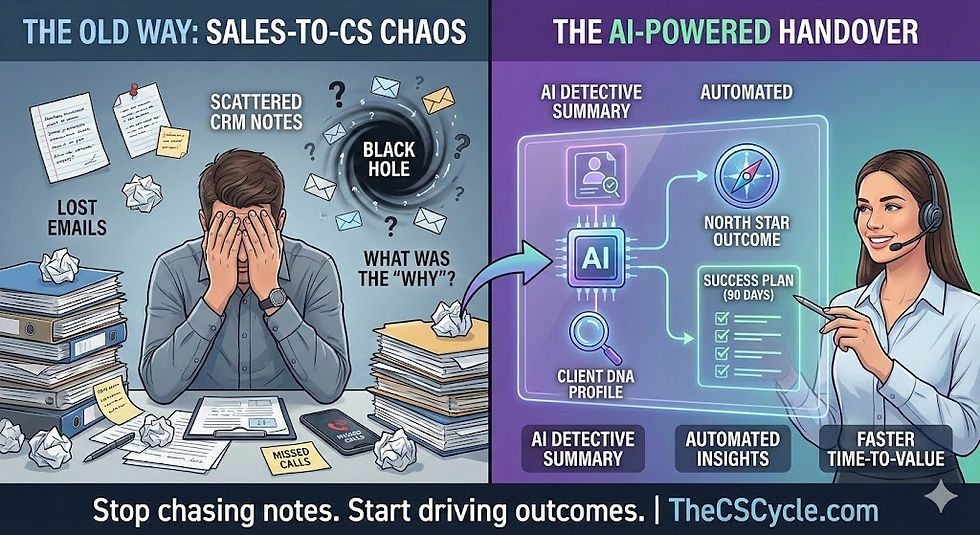

Cognitive Debt is the intellectual deficit that accumulates when we habitually outsource our critical thinking to algorithms. In CS, this looks like a CSM who can generate a 40-slide success plan in seconds but cannot defend the underlying logic when a stakeholder asks, "Why this strategy, and why now?"

When we stop "wrestling" with problems, our mental muscles weaken. We transition from being Value Architects to AI or Algorithmic Operators.

The Anatomy of a Thinking Crisis – The Three Warning Signs

The "Black Box" Strategy: Polished outcomes with no internal "why." If you can't explain the reasoning without the underlying tool, you don't own the strategy.

The Fragility of Knowledge: We can execute complex tasks (like coding or data modeling) via AI, but we lose the "first principles" understanding required to fix things when they break.

The Convergence Trap: When everyone uses the same LLMs to solve the same problems, innovation dies. We settle for the "statistically probable" instead of the genuinely original.

The Evidence: Why "Judgment Atrophy" is Measurable

This isn't just a feeling; it is a documented neurological shift. To understand the stakes for the 2026 workforce, we must look at the "Smoking Gun" research. Here are a few examples of recent studies.

1. The Neurological Disengagement

The MIT Media Lab (2025) used EEG scans to show that when people rely on AI for deep tasks, the prefrontal cortex, the brain's CEO, effectively "disengages."

This is the biological root of Cognitive Debt. Furthermore, a 2025 study published by MDPI on the "Cognitive Atrophy Paradox" warns that we are entering a "Bypass Phase," in which we skip knowledge construction entirely.

2. The Accuracy Paradox

The famous "Jagged Frontier" study (Harvard/Wharton/BCG) revealed a chilling stat: when tasks get complex, AI-reliant workers are 19% more likely to be wrong.

They stop auditing the machine. This is compounded by what has been called the "Cognitive Ease" Paradox: AI can make us around 25% faster while making our inquiry significantly shallower.

3. The Death of the Unique Edge

Research from Microsoft (2025) found a 30% reduction in diversity of thought when teams over-rely on Generative AI.

We are converging on a "mean" average. As Gerlich (2025) proved, there is now a direct negative correlation between AI usage frequency and independent critical thinking scores.

The Counter-Argument: Is This Just Evolution?

It is worth asking: Are we losing a skill, or simply outgrowing it?

The "Evolutionary Baseline" perspective suggests we are moving toward Distributed Cognition. Just as we stopped memorizing phone numbers when contact lists arrived, perhaps we are liberating our minds for "Meta-Intelligence."

In this view, the human mind isn't necessarily giving up. Instead, it learns to orchestrate complex systems, manage ethical nuance, and navigate high-level strategy that was previously obscured by the "noise" of manual processing.

We must ask ourselves: Is the "struggle" of thinking a necessary virtue, or are we simply mourning a skill that is no longer required for progress in a post-manual era?

The Human-First Protocol: 3 Steps to Retain Your Edge

To lead in 2026, we must move from being passive Consumers to Architects of Intent. This requires introducing "Cognitive Friction" back into our workflows.

1. The "Offline Draft" Rule

Never start with a prompt. For any complex CS strategy or technical solution, spend 15 minutes sketching your logic in a blank notebook or a "dumb" text editor. Build the skeleton yourself; then use AI as a second stage to add the muscles and skin to your outline.

2. Adversarial "Red Team" Prompting

AI is a "Yes-Man." It is programmed to be helpful, which often means it's obedient. It’s the time to challenge it.

The Audit Prompt: "Assume this strategy fails in six months. Find three logical fallacies in your own reasoning and two cultural assumptions you've missed."

3. The Three-Source Rule (Triangulation)

Treat every AI output as a hypothesis, not a fact. Before a client-facing meeting, make sure your "AI-generated insight" (the first source) is anchored by two human sources:

Human evidence 1: Raw, primary data (CRM, financial reports).

Human evidence 2: Lived experience (a person’s previous interactions with the customer, interviews, intuition, and so on).

Conclusion: Reclaiming the Architect's Seat

In 2026, the competitive advantage isn't speed; we can assume that the machine has already won that race. The advantage is Intent.

Being an Architect of Intent means you are the one providing the ethics, the vision, and the "why" that no algorithm can replicate.

View "cognitive friction" not as an obstacle, but as the spark that keeps your professional perspective sharp.

How are you keeping your "thinking muscles" sharp this year? Are you the Architect or the Operator? Let’s discuss in the comments.
